AI NEEDS GIANT AMOUNTS OF ELECTRICITY

By Aurel Stratan, editor, Rudeana SRL-D

ENERGYCENTRAL - Aug 1, 2024 - Annual electricity consumption by artificial intelligence (AI) is surging at an astonishing rate and by 2027 could be comparable to that of Argentina, the Netherlands, or Sweden.

The lead author of the underlying study, data scientist Alex de Vries of Vrije Universiteit Amsterdam, predicts that by 2027 the AI server farms of giants such as OpenAI, Google, or Meta could consume between 85 and 134 terawatt-hours (TWh) of electricity per year.

That would amount to about 0.5% of global electricity consumption and would leave a sizeable carbon footprint.
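The percentage can be sanity-checked by dividing the projected range by total world electricity use. The sketch below assumes a global baseline of roughly 27,000 TWh per year; that round figure is not taken from the article, so swap in another baseline to see how sensitive the share is.

```python
# Back-of-envelope share of world electricity for the projected AI range.
# ASSUMPTION: global electricity consumption of about 27,000 TWh per year
# (illustrative baseline, not a figure from the article).
GLOBAL_ELECTRICITY_TWH = 27_000.0


def share_of_global(ai_twh: float, global_twh: float = GLOBAL_ELECTRICITY_TWH) -> float:
    """Return AI electricity use as a percentage of global consumption."""
    return 100.0 * ai_twh / global_twh


for ai_twh in (85.0, 134.0):
    print(f"{ai_twh:.0f} TWh -> {share_of_global(ai_twh):.2f}% of global electricity")
```

Under this baseline the low end comes out near 0.3% and the high end near 0.5%, so the headline figure corresponds to the top of the range.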

This situation is reminiscent of the much-criticized power consumption of the crypto industry in recent years.

How consumption was estimated

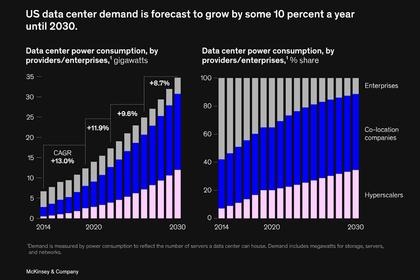

AI chatbots such as ChatGPT and Bard are known to be voracious consumers of electricity and water. More precisely, the blame lies with the colossal data centers that power them, and their growth shows no sign of slowing down.

Determining the exact energy consumption of AI companies such as OpenAI is challenging because they keep these figures secret. De Vries instead estimated their energy use from sales of NVIDIA A100 servers, which are estimated to make up 95 percent of the infrastructure supporting the AI industry.
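One way to turn server sales into an energy figure is to multiply the installed fleet by the power each server draws and by the hours in a year. The fleet size (1.5 million servers by 2027) and the two per-server power levels (6.5 kW for A100-class and 10.2 kW for H100-class machines) below are assumptions chosen for illustration; the 6.5 kW value also appears in the quotation later in this article. With them, this simple formula lands on the same 85 to 134 TWh range, but it is a sketch of the approach, not a reproduction of the study's model.

```python
# Supply-side estimate: servers x power per server x hours per year.
# ASSUMPTIONS: 1.5 million servers in operation, 6.5 kW (A100-class) to
# 10.2 kW (H100-class) per server, running at full load around the clock.
HOURS_PER_YEAR = 24 * 365


def annual_twh(servers: int, kw_per_server: float, utilization: float = 1.0) -> float:
    """Annual electricity use in TWh for a server fleet at a given average load."""
    kwh = servers * kw_per_server * HOURS_PER_YEAR * utilization
    return kwh / 1e9  # 1 TWh = 1,000,000,000 kWh


fleet = 1_500_000
low = annual_twh(fleet, 6.5)
high = annual_twh(fleet, 10.2)
print(f"{low:.1f} to {high:.1f} TWh per year")  # 85.4 to 134.0 TWh per year
```

The `utilization` parameter is a hypothetical knob: lowering it below 1.0 shows how much the estimate depends on the assumption that servers run flat out all year.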

AI encompasses a variety of technologies and methods that enable machines to exhibit intelligent behavior. Within this field, generative AI is used to create new content such as text and images. Text-based tools like ChatGPT rely on natural language processing, and all of these tools share a common two-phase process: initial training followed by inference.

The training phase, often considered the most energy-intensive, has been a focal point of sustainability research. During this phase, a model is fed extensive datasets and repeatedly adjusts its parameters so that its predicted output matches the target output as closely as possible.
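To make "adjusting parameters to align predicted output with target output" concrete, here is a toy sketch using plain gradient descent on a one-parameter model. It is an illustration of the idea only, not how production language models are trained; real models repeat this kind of update over billions of parameters and enormous datasets, which is where the computation, and hence the energy, goes.

```python
# Toy "training": nudge one parameter w so that predictions w * x move
# toward the targets y, by stepping against the gradient of the squared error.


def train(xs, ys, lr=0.01, epochs=200):
    w = 0.0  # single model parameter, initialised arbitrarily
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean squared error (w*x - y)^2 with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad  # update the parameter to reduce the error
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # target outputs follow y = 2x
print(round(train(xs, ys), 3))  # 2.0
```

Each pass over the data is one more round of arithmetic; scaling the same loop up in data and parameters is what drives training figures like those below.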

For instance, Hugging Face's BLOOM model consumed 433 MWh of electricity during training. Other large language models like GPT-3, Gopher, and OPT reportedly used 1,287, 1,066, and 324 MWh for training, respectively, due to their large datasets and numerous parameters.

After training, however, these models enter the less-studied inference phase, in which they generate outputs from new input data. For a tool like ChatGPT, this means producing live responses to user queries.

According to Google, 60% of its AI-related energy consumption between 2019 and 2021 stemmed from inference, a finding that led the Dutch researcher to examine the cost of inference relative to training.

Inference remains relatively unexplored in research on AI's environmental sustainability. However, there are indications that its energy demand can be significantly higher than that of training: OpenAI reportedly needed an estimated 564 MWh per day to operate ChatGPT, enough to match GPT-3's entire training consumption of 1,287 MWh in under three days.
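The comparison can be extended to a full year using the two figures quoted in this section, GPT-3's 1,287 MWh of training and ChatGPT's estimated 564 MWh per day of inference. Pairing them is an approximation (ChatGPT is not the same model as GPT-3, and daily load is not constant), so treat this as back-of-envelope arithmetic under those assumptions.

```python
# Compare one-off training energy with ongoing inference energy.
# Figures as quoted in the article; ASSUMPTION: the daily inference rate is
# held constant and GPT-3's training energy stands in for ChatGPT's.
GPT3_TRAINING_MWH = 1_287.0
CHATGPT_INFERENCE_MWH_PER_DAY = 564.0

days_to_match_training = GPT3_TRAINING_MWH / CHATGPT_INFERENCE_MWH_PER_DAY
yearly_inference_mwh = CHATGPT_INFERENCE_MWH_PER_DAY * 365
ratio = yearly_inference_mwh / GPT3_TRAINING_MWH

print(f"Inference equals GPT-3's training energy after {days_to_match_training:.1f} days")
print(f"One year of inference: {yearly_inference_mwh:,.0f} MWh, about {ratio:.0f}x training")
```

Under these assumptions a year of inference uses roughly 160 times the energy of one GPT-3 training run, which is why the inference phase deserves more scrutiny.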

The energy consumption ratio between these phases remains an open question, requiring further examination.

Future energy footprint

The AI boom of 2023 drove up demand for AI chips. Chipmaker NVIDIA reported record revenue of 13.5 billion dollars in the second quarter of 2023, driven largely by AI, and revenue in its data center segment rose 141% from the previous quarter. That surge underscores the growing demand for AI products and could translate into a significant increase in AI's energy footprint.

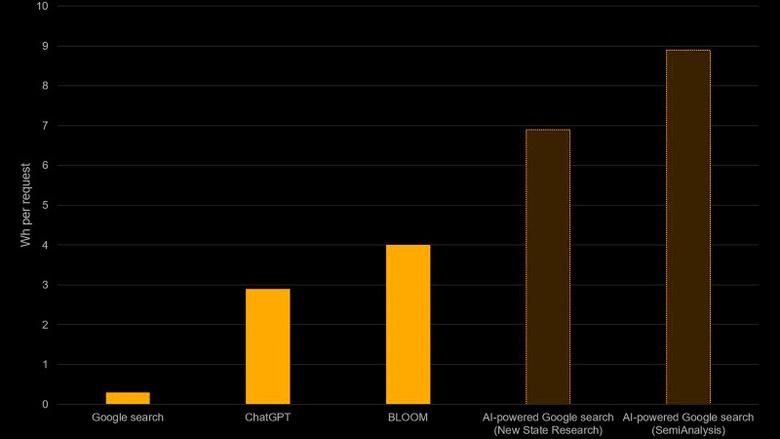

If generative AI like ChatGPT were integrated into every Google search, power demand could rise substantially. One estimate cited in the research suggests that such a scenario would require more than half a million of NVIDIA's HGX A100 servers (about 4.1 million GPUs), resulting in massive electricity consumption.

"At a power demand of 6.5 kW per server, this would translate into a daily electricity consumption of 80 GWh and an annual consumption of 29.2 TWh. New Street Research independently arrived at similar estimates, suggesting that Google would need approximately 400,000 servers, which would lead to a daily consumption of 62.4 GWh and an annual consumption of 22.8 TWh. With Google currently processing up to 9 billion searches daily, these scenarios would average to an energy consumption of 6.9-8.9 Wh per request," the Dutch scientist calculated.
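The arithmetic in the quotation can be reproduced in a few lines: multiply the server count by 6.5 kW and 24 hours for the daily total, scale to a year, then divide by 9 billion searches. The exact count of 512,821 servers is an assumption standing in for "more than half a million" (it yields the quoted 80 GWh per day), and the 8 GPUs per server is an assumed HGX A100 configuration used only to recover the roughly 4.1 million GPU figure.

```python
# Reproduce the quoted Google-search scenarios:
# servers x 6.5 kW x 24 h per day, spread across 9 billion daily searches.
KW_PER_SERVER = 6.5        # per-server power demand used in the quotation
SEARCHES_PER_DAY = 9e9     # Google's daily search volume, as quoted
GPUS_PER_SERVER = 8        # ASSUMPTION: 8-GPU HGX A100 configuration


def search_scenario(servers: int) -> tuple[float, float, float]:
    """Return (daily GWh, annual TWh, Wh per search) for a given server count."""
    daily_kwh = servers * KW_PER_SERVER * 24
    daily_gwh = daily_kwh / 1e6                 # 1 GWh = 1,000,000 kWh
    annual_twh = daily_gwh * 365 / 1e3          # 1 TWh = 1,000 GWh
    wh_per_search = daily_kwh * 1e3 / SEARCHES_PER_DAY
    return daily_gwh, annual_twh, wh_per_search


# ASSUMPTION: 512,821 servers stands in for "more than half a million".
scenarios = {"ChatGPT in every search": 512_821, "New Street Research": 400_000}
for label, servers in scenarios.items():
    daily, annual, per_search = search_scenario(servers)
    print(f"{label}: {servers * GPUS_PER_SERVER:,} GPUs, {daily:.1f} GWh/day, "
          f"{annual:.1f} TWh/yr, {per_search:.1f} Wh/search")
```

The first scenario works out to about 80.0 GWh per day, 29.2 TWh per year, and 8.9 Wh per search; the second to 62.4 GWh, 22.8 TWh, and 6.9 Wh, matching the 6.9 to 8.9 Wh range in the quotation.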

Requiring AI companies to report their electricity and water consumption might be a first step toward addressing the situation. In October 2023, for example, California Governor Gavin Newsom signed two major climate disclosure laws that will require around 10,000 large companies, including tech giants such as OpenAI and Alphabet, to disclose their carbon emissions starting in 2026.

-----

This thought leadership article was originally shared with Energy Central's Load Management Community Group.

-----
